Wwise and ODIN

Integrating ODIN Voice Chat with the Wwise Audio Solution in Unreal.

Introduction

Welcome to this guide on integrating the ODIN Voice Chat Plugin with the Wwise Audio Solution in Unreal. The code used in this guide is available on the ODIN-Wwise Sample Project GitHub Repository.

What You’ll Learn:

- How the UAkOdinInputComponent script works and how to use it in your project

- How to properly set up ODIN in Unreal when using Wwise as the audio solution

- How to deal with limitations and potential pitfalls

Getting Started

To follow this guide, you’ll need to have some prerequisites:

- Basic knowledge of Unreal as well as Wwise

- The Wwise Plugin for Unreal, which you can get here

- The ODIN Voice Chat Plugin, available here

To set up Wwise in your project, please follow Wwise’s in-depth integration-tutorial. You can find the tutorial here.

To set up the ODIN Voice Chat Plugin, please take a look at our Getting-Started guide, which you can find here:

Begin ODIN Getting Started Guide

This guide will show you how to access the Odin Media Stream and copy it to the Audio Input Plugin of Wwise in order to pass it to the Wwise Audio Engine. This means we will only cover the receiver side of the communication. The sender just uses Unreal’s Audio Capture Module and is thus handled no differently than in any other implementation of Odin in Unreal.

Sample Project

You can find a sample project in a repository in our GitHub account. Feel free to download it and set it up in order to view a working integration of this class in a small sample project. This sample is based on the result of the second video of the Odin tutorial Series.

UAkOdinInputComponent

The UAkOdinInputComponent class is an essential part of the Wwise integration. It replaces the default ODIN UOdinSynthComponent component, taking over the voice output responsibilities by using Wwise. This class is crucial for receiving voice chat data from the ODIN servers.

The header can be found here and the source file is located here.

The UAkOdinInputComponent inherits from Audiokinetic’s AkAudioInputComponent from their Audio Input Plugin. As such it must override the methods FillSamplesBuffer and GetChannelConfig to work with the Wwise Audio Engine. There is a tutorial from Audiokinetic on How to use their Audio Input Plugin in Unreal. The script is a customization of that, replacing the usage of the microphone input by Odin’s Voice Media Stream.

You can either follow the Usage setup to drop the UAkOdinInputComponent directly into your project, or take a look at how it works to adjust the functionality to your requirements.

This is the header:

#pragma once

#include "CoreMinimal.h"
#include "AkAudioInputComponent.h"
#include "AkOdinInputComponent.generated.h"

class OdinMediaSoundGenerator;
class UOdinPlaybackMedia;

UCLASS(ClassGroup=(Custom), BlueprintType, Blueprintable, meta=(BlueprintSpawnableComponent))
class MODULE_API UAkOdinInputComponent : public UAkAudioInputComponent
{
    GENERATED_BODY()

public:
    // Sets default values for this component's properties
    UAkOdinInputComponent(const class FObjectInitializer& ObjectInitializer);

    virtual void DestroyComponent(bool bPromoteChildren) override;

    // Binds an incoming Odin media stream to this component
    UFUNCTION(BlueprintCallable, Category="Odin|Sound")
    void AssignOdinMedia(UPARAM(ref) UOdinPlaybackMedia *&Media);

    // Called by the Wwise Audio Input Plugin
    virtual void GetChannelConfig(AkAudioFormat& AudioFormat) override;
    virtual bool FillSamplesBuffer(uint32 NumChannels, uint32 NumSamples, float** BufferToFill) override;

protected:
    UPROPERTY(BlueprintReadOnly, Category="Odin|Sound")
    UOdinPlaybackMedia* PlaybackMedia = nullptr;

    TSharedPtr<OdinMediaSoundGenerator, ESPMode::ThreadSafe> SoundGenerator;

    // Scratch buffer for the interleaved samples read from Odin
    float* Buffer = nullptr;
    int32 BufferSize = 0;
};

And this is the source file of the class:

#include "AkOdinInputComponent.h"
#include "OdinFunctionLibrary.h"
#include "OdinPlaybackMedia.h"

UAkOdinInputComponent::UAkOdinInputComponent(const FObjectInitializer& ObjectInitializer)
    : UAkAudioInputComponent(ObjectInitializer)
{
    PrimaryComponentTick.bCanEverTick = true;
}

void UAkOdinInputComponent::AssignOdinMedia(UOdinPlaybackMedia*& Media)
{
    if (nullptr == Media)
        return;

    // Create the generator that reads audio from the Odin media stream
    this->SoundGenerator = MakeShared<OdinMediaSoundGenerator, ESPMode::ThreadSafe>();
    this->PlaybackMedia = Media;
    SoundGenerator->SetOdinStream(Media->GetMediaHandle());
}

void UAkOdinInputComponent::DestroyComponent(bool bPromoteChildren)
{
    Super::DestroyComponent(bPromoteChildren);

    if (nullptr != Buffer)
    {
        // Buffer was allocated with new[], so it must be released with delete[]
        delete[] Buffer;
        Buffer = nullptr;
        BufferSize = 0;
    }
}

void UAkOdinInputComponent::GetChannelConfig(AkAudioFormat& AudioFormat)
{
    // Defaults, used if the Odin initialization subsystem is not available
    int NumChannels = 1;
    int SampleRate = 48000;

    if (GetWorld() && GetWorld()->GetGameInstance()) {
        const UOdinInitializationSubsystem* OdinInitSubsystem =
            GetWorld()->GetGameInstance()->GetSubsystem<UOdinInitializationSubsystem>();
        if (OdinInitSubsystem) {
            NumChannels = OdinInitSubsystem->GetChannelCount();
            SampleRate = OdinInitSubsystem->GetSampleRate();
        }
    }

    AkChannelConfig ChannelConfig;
    ChannelConfig.SetStandard(AK::ChannelMaskFromNumChannels(NumChannels));

    UE_LOG(LogTemp, Warning, TEXT("Initializing Ak Odin Input Component with %i channels and Sample Rate of %i"), NumChannels, SampleRate);

    // set audio format
    AudioFormat.SetAll(
        SampleRate,        // Sample rate
        ChannelConfig,     // \ref AkChannelConfig
        8 * sizeof(float), // Bits per samples
        sizeof(float),     // Block Align = 4 Bytes? Shouldn't it be 2*4=8 Bytes, because of two channels?
        AK_FLOAT,          // feeding floats
        AK_NONINTERLEAVED
    );
}

bool UAkOdinInputComponent::FillSamplesBuffer(uint32 NumChannels, uint32 NumSamples, float** BufferToFill)
{
    if (!SoundGenerator || !PlaybackMedia)
        return false;

    // Reallocate the scratch buffer only when the requested size changes
    const int32 RequestedTotalSamples = NumChannels * NumSamples;
    if (BufferSize != RequestedTotalSamples)
    {
        if (nullptr != Buffer)
            delete[] Buffer; // allocated with new[], so release with delete[]
        Buffer = new float[RequestedTotalSamples];
        BufferSize = RequestedTotalSamples;
    }

    // Read interleaved samples from the Odin media stream
    const uint32 Result = SoundGenerator->OnGenerateAudio(Buffer, RequestedTotalSamples);
    if (odin_is_error(Result))
    {
        FString ErrorString = UOdinFunctionLibrary::FormatError(Result, true);
        UE_LOG(LogTemp, Error, TEXT("UAkOdinInputComponent: Error during FillSamplesBuffer: %s"), *ErrorString);
        return false;
    }

    // Wwise expects non-interleaved data: one array per channel
    for (uint32 s = 0; s < NumSamples; ++s)
    {
        for (uint32 c = 0; c < NumChannels; ++c)
        {
            BufferToFill[c][s] = Buffer[s * NumChannels + c];
        }
    }

    return true;
}

Remember to adjust the Build.cs file of your game module accordingly. You need a dependency on “Odin” and also on “OdinLibrary”, which is required for the call to odin_is_error(). From Wwise, the “AkAudio” and “Wwise” modules are needed in order to work with the Audio Input Plugin. In total, add these to your Public Dependency Modules:

PublicDependencyModuleNames.AddRange(
    new string[]
    {
        "Core",
        "Odin",
        "AkAudio",
        "Wwise",
        "OdinLibrary"
    }
);

Usage

The above class uses the Wwise Audio Input Plugin to pass dynamically created Audio Data to the Wwise Engine. So we will need to set this up in the Wwise Authoring Tool and then use the class in your Game Logic properly.

Creating a Wwise Event

To provide Unreal with the correct Wwise Event you need to add an Audio Input Plugin Source to your Soundbank. An example of this can be found in the sample project. To achieve this, you can follow these steps:

- In the “Audio” tab of the Project Explorer, right-click the desired work unit of the “Actor-Mixer-Hierarchy” and add a “New Child->Audio Input”.

Wwise Step1

- Adjust it as needed for your project.

- Make sure to go to the “Conversion” tab in the Contents Editor and set the Conversion to “Factory Conversion Settings->PCM->PCM as Input” by clicking on the “»” button.

Wwise Step2

- Right-click the newly created source and add a “New Event->Play” to it.

Wwise Step3

- If you have no Soundbank yet, create one.

Wwise Step4

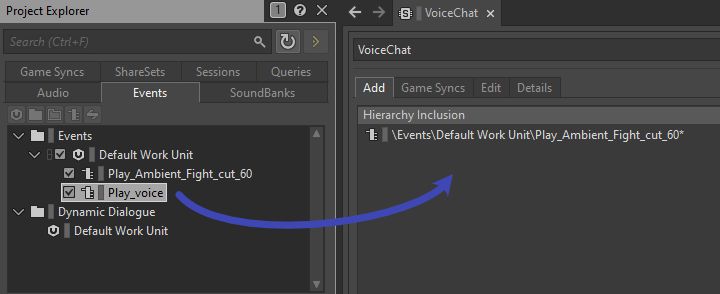

- Lastly you need to add that event to the Soundbank by dragging it from the “Events” tab in the Project Explorer to the Soundbank’s Content Editor.

Wwise Step5

- Export using the Wwise Browser as described in Audiokinetic’s Guide in Unreal and you are good to go! A quick way to import to your Unreal Project is to open it in the Unreal Editor and go to Window->Wwise Browser. Here you can click on Generate Soundbanks in the top right corner. Now you should be able to see your defined Play_voice event in the Wwise Browser in the Events folder in the tree. Drag and drop it into a folder in your Content Browser, and you can then use it in the Unreal project.

Wwise Step6

Integrating the Input Component in your Unreal Project

In the next step we will now use the created event to play back the incoming Odin Media Stream. Again you can find an example of this in the Odin Client Component of the sample project.

First, replace the creation of the OdinSynthComponent that you added to your project while following the Odin Unreal Guide with the new AkOdinInputComponent.

In the OnMediaAdded event of your Odin implementation, set the AkAudioEvent of the created AkOdinInputComponent to the Wwise Event that we imported earlier. Then call the Assign Odin Media function declared in the C++ class, passing it the reference to the incoming Media Stream, and finally call PostAssociatedAudioInputEvent. It is important to use that function from the AkAudioInputComponent, since any other “Post Event” function will not work with the Wwise Audio Input Plug-in. You can see a sample of the Blueprint implementation below:

Setup for the OnMediaAdded event

Like with the OdinSynthComponent, you can also choose to place the AkOdinInputComponent directly on the Player Character as a component and then reference it in your OnMediaAdded event handler. This way you do not have to create it in the Blueprint and it is easier to change its properties - e.g. its Wwise-specific (attenuation) settings.

How it works

The above class uses the Wwise Audio Input Plugin to pass dynamically created Audio Data to the Wwise Engine. It copies the incoming Audio Stream from Odin to the Input Buffer of the Audio Input Component by Wwise. This is done by inheriting from the UAkAudioInputComponent and overriding the respective methods.

1. Setup

The setup of the UAkOdinInputComponent is done by passing it a reference to the incoming Odin Media Stream. In this guide we have done this via a Blueprint call, but the method can also be called from another C++ Class in your game module.

This method, AssignOdinMedia, creates a shared pointer to a new OdinMediaSoundGenerator and sets its OdinStream to the incoming Media’s handle.

Next, the Wwise Audio Input Plug-in asks for the channel configuration by calling GetChannelConfig (this is done by the Wwise Audio Engine, so we do not need to call this function ourselves). Here we set the audio format to non-interleaved floats, using the channel count and sample rate reported by the UOdinInitializationSubsystem. If the subsystem is not available, the code falls back to one channel at 48 kHz.

2. Reading and Playing Back ODIN Audio Streams

The FillSamplesBuffer function is called from the Wwise Audio Input Plug-in whenever the playback requests more data for its buffer.

Here the AkOdinInputComponent calls the OnGenerateAudio function of the OdinMediaSoundGenerator. The generated sound is copied into the Buffer.
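The intermediate Buffer is only reallocated when the requested number of samples changes. As a minimal standalone sketch of that idea (not the code from the sample project), the same behavior can be expressed with a std::vector, which releases its memory automatically. The names ScratchBuffer, Acquire, Size and ReallocationCount are hypothetical and exist only for this illustration:

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper (not part of Odin or Wwise): owns the interleaved
// scratch buffer and resizes it only when the requested size changes.
class ScratchBuffer
{
public:
    // Returns a pointer to storage for NumChannels * NumSamples floats.
    float* Acquire(std::uint32_t NumChannels, std::uint32_t NumSamples)
    {
        const std::size_t Requested =
            static_cast<std::size_t>(NumChannels) * NumSamples;
        if (Storage.size() != Requested)
        {
            // Size changed: resize and zero the storage.
            Storage.assign(Requested, 0.0f);
            ++Reallocations;
        }
        return Storage.data();
    }

    std::size_t Size() const { return Storage.size(); }
    int ReallocationCount() const { return Reallocations; }

private:
    std::vector<float> Storage;
    int Reallocations = 0;
};
```

With two channels and 512 samples per channel, Acquire(2, 512) provides room for 1024 floats. Repeated calls with the same arguments reuse the existing storage, so the audio callback does not allocate on every invocation.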

If any error occurs in that call, the function will return without copying anything and tell the Wwise Audio Plugin that it failed to capture more samples.

Since Wwise accepts float samples only as non-interleaved data (see here for reference) and the OdinMediaSoundGenerator provides them interleaved, we need to sort them accordingly into the BufferToFill. This is done with the nested for-loops at the end of the function.
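To make the reordering concrete, here is a minimal standalone sketch of the same nested loops outside of Unreal. The function name Deinterleave is hypothetical and only used for this illustration. It assumes the interleaved input holds NumChannels * NumSamples floats and that PerChannel points to NumChannels arrays of NumSamples floats each:

```cpp
#include <cstdint>

// Copies interleaved samples (L0 R0 L1 R1 ...) into one array per channel
// (L0 L1 ... and R0 R1 ...), mirroring the loops in FillSamplesBuffer.
void Deinterleave(const float* Interleaved, std::uint32_t NumChannels,
                  std::uint32_t NumSamples, float** PerChannel)
{
    for (std::uint32_t s = 0; s < NumSamples; ++s)
    {
        for (std::uint32_t c = 0; c < NumChannels; ++c)
        {
            // Sample s of channel c sits at index s * NumChannels + c
            PerChannel[c][s] = Interleaved[s * NumChannels + c];
        }
    }
}
```

For a stereo input of {1, 10, 2, 20, 3, 30}, the first channel receives {1, 2, 3} and the second channel receives {10, 20, 30}.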

Lastly, if everything worked as expected the function returns true to let Wwise know it can now use the BufferToFill.

Conclusion

This simple implementation of an Odin to Wwise adapter for Unreal is a good starting point to give you the control over the audio playback that you need for your Odin Integration in your project. Feel free to check out the sample project in our public GitHub and re-use or extend any code to fit your specific needs.

This is only a starting point for your Odin integration with Wwise and the Unreal Engine. Feel free to check out any other learning resources and adapt the material as needed, e.g. create realistic or out-of-this-world dynamic immersive experiences with Wwise Spatial Audio, also known as “proximity chat” or “positional audio”: